The US is not releasing crocodiles to stop migration, nor is the Louvre on fire: how to tell if an image was created using AI | Technology

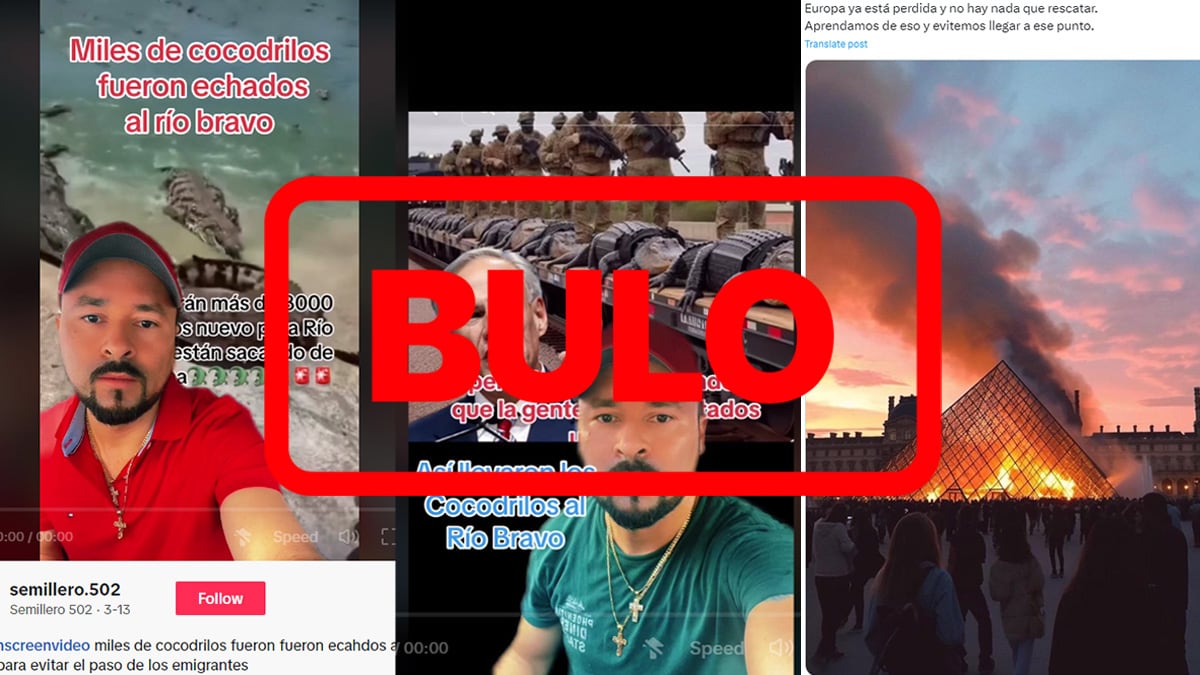

The United States did not release “8,000 crocodiles into the Rio Grande” to prevent migrants from crossing, nor did the Louvre pyramid burn down. Nor has anyone found a giant octopus on a beach, Bigfoot or the Loch Ness Monster. All this misinformation comes from images created by artificial intelligence. As tech companies like Google, Meta and OpenAI try to detect AI-generated content and create tamper-proof watermarks, users are faced with a difficult task: determining whether images circulating on social media are real or not. While this can sometimes be done with the naked eye, there are tools that can help in more complex cases.

Generating images with the help of artificial intelligence is becoming easier. “Today, anyone without any technical skills can type a sentence into a platform like DALL-E, Firefly, Midjourney or another prompt-based model and create a hyper-realistic piece of digital content,” says Jeffrey McGregor, CEO of Truepic, one of the founding companies of the Coalition for Content Provenance and Authenticity (C2PA), which seeks to create a standard to verify the authenticity and provenance of digital content. Some services are free, and others don’t even require an account.

“AI can create incredibly realistic images of people or events that never happened,” says Neal Krawetz, founder of Hacker Factor Solutions and FotoForensics, a tool for checking whether an image may have been altered. In 2023, for example, an image of Pope Francis wearing a Balenciaga puffer jacket went viral, as did images of former US President Donald Trump running from police to avoid arrest. Krawetz points out that these types of images can be used to influence opinions, damage someone’s reputation, create misinformation and provide false context for real-life situations. Thus, “they can undermine trust in reliable sources.”

Artificial intelligence tools can also be used to falsely depict people in sexually compromising positions, committing crimes, or in the company of criminals, as noted by V.S. Subrahmanian, a professor of computer science at Northwestern University. Last year, dozens of minors in Extremadura reported that fake nude photos of them, created by artificial intelligence, were being circulated. “These images can be used to extort, blackmail and destroy the lives of leaders and ordinary citizens,” says Subrahmanian.

And that is not all. AI-generated images can pose a serious threat to national security: “They can be used to divide a country’s population by pitting one ethnic, religious or racial group against another, which could lead to long-term unrest and political turmoil.” Josep Albors, director of research and awareness at Spanish computer security company ESET, explains that many hoaxes rely on these types of images to spark controversy and provoke reactions. “In an election year in many countries, this could tip the scales one way or the other,” he says.

Tricks for detecting AI-generated images

Experts like Albors advise being suspicious of everything in the online world. “We have to learn to live with the fact that this exists, with the fact that AI is generating parallel realities, and simply keep that in mind when we receive content or see something on social media,” says Tamoa Calzadilla, editor-in-chief of Factchequeado, an initiative by Maldita.es and Chequeado to combat Spanish-language disinformation in the United States. Knowing that an image may have been created by AI “is a great step to avoid being deceived and sharing misinformation.”

Some of these types of images are easy to spot just by looking at details of the hands, eyes or face, says Jonathan Pulla, a fact checker at Factchequeado. Such is the case with an AI-generated image of President Joe Biden in military uniform: “You can tell from the different skin tones on his face, the phone cables that go nowhere, and the disproportionately large forehead of one of the soldiers who appears in the image.”

He also cites several manipulated images of actor Tom Hanks posing in T-shirts with slogans for or against Donald Trump’s re-election. “The fact that he [Hanks] has the same pose and only the text on the shirt changes, that his skin is very smooth and that his nose is uneven indicates that they may have been created using digital tools such as artificial intelligence,” explain the fact-checkers at Maldita.es about these images, which went viral in early 2024.

According to Albors, a trained user can identify many AI-generated images with the naked eye, especially those created using free tools: “If we look at the colors, we will often notice that they are not natural, that everything looks like plasticine and that even some components of these images merge with each other, such as facial hair or different items of clothing.” If the image is of a person, the expert suggests also checking whether there is anything abnormal about the person’s limbs.

Although the first generation of image generators made “simple mistakes”, they have improved markedly over time. This is pointed out by Subrahmanian, who emphasizes that in the past they often depicted people with six fingers and unnatural shadows. They also rendered street and store signs incorrectly, making “absurd spelling errors.” “Technology today has largely overcome these shortcomings,” he says.

Tools to detect fake images

The problem is that now, “for many people, an AI-generated image is already virtually indistinguishable from the real thing,” as Albors points out. There are tools that can help identify these types of images, such as AI or NOT, Sensity, FotoForensics or Hive Moderation. OpenAI is also building its own tool to detect content created by its DALL-E image generator, it announced in a May 7 statement.

These types of tools, Pulla says, are useful as a complement to observation, “because sometimes they are not very accurate or do not detect some of the images generated by the AI.” Factchequeado verifiers typically use Hive Moderation and FotoForensics. Both are free to use and work the same way: the user uploads a photo and requests an analysis. While Hive Moderation shows the percentage likelihood that content was created by artificial intelligence, FotoForensics results are harder to interpret for someone without prior knowledge.

When given the image of crocodiles supposedly sent by the US to the Rio Grande to prevent migrants from crossing, the Pope in a Balenciaga coat, or one of the images of a satanic Happy Meal at McDonald’s, Hive Moderation reports a 99.9% likelihood that each was created by AI. However, for a manipulated photo of Kate Middleton, Princess of Wales, it indicates a probability of 0%. In this case, Pulla found FotoForensics and InVID useful, as they “can show certain altered image details that are not visible.”

But why is it so difficult to know if an image was created using artificial intelligence? According to Subrahmanian, the main limitation of such tools is the lack of context and prior knowledge. “People use their common sense all the time to separate real claims from false ones, but machine learning algorithms for detecting deepfake images have made little progress in this regard,” he says. The expert believes it will become increasingly unlikely that anyone can know with 100% certainty whether a photo is real or created by artificial intelligence.

Even if a detection tool were accurate 99% of the time in determining whether content was created by artificial intelligence or not, “that 1% gap on an Internet scale is huge.” In just one year, AI created 15 billion images, according to a report from Everypixel Journal, which highlights that “AI has already created as many images as photographers have taken in 150 years.” “When all it takes is one convincingly fabricated image to undermine trust, 150 million undetected images is a pretty alarming number,” McGregor says.
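The 150 million figure follows directly from the two numbers quoted above: 1% of 15 billion images. As a minimal sketch of that arithmetic (the function name `undetected_images` is hypothetical, chosen only for illustration, and the calculation assumes the error rate applies uniformly to every image):

```python
def undetected_images(total_images: int, accuracy: float) -> int:
    """Estimate how many AI-generated images a detector would miss.

    Assumes every image is checked once and that the detector's
    accuracy applies uniformly (a simplification for illustration).
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return round(total_images * (1.0 - accuracy))


if __name__ == "__main__":
    # Figures quoted in the article: 15 billion images, 99% accuracy.
    missed = undetected_images(15_000_000_000, 0.99)
    print(f"{missed:,}")  # 150,000,000
```

Even at a hypothetical 99.9% accuracy, the same calculation would still leave 15 million images undetected.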

Beyond pixel manipulation, McGregor points out that it’s also nearly impossible to determine whether an image’s metadata (time, date, and location) is accurate once it’s been created. The expert believes that “digital content provenance, which uses cryptography to tag images, will be the best way for users in the future to determine which images are original and have not been altered.” His company, Truepic, says it has launched the world’s first transparent deepfake carrying these marks, with information about its origin.

Until these systems are widely adopted, it is important that users take a critical stance. A guide produced by Factchequeado with support from the Reynolds Journalism Institute lists 17 tools to combat disinformation, several of them for checking photos and videos. The key, Calzadilla says, is to realize that none of them is infallible or 100% reliable. Therefore, to determine whether an image was created using AI, one tool is not enough: “Verification is carried out using several methods: observation, the use of tools and classical journalistic methods. That is, contact the original source, look through their social networks and check whether the information attributed to them is true.”
